The Danger of Scientific Hype

Does the publication process reward novelty at the expense of good science?

By Taylor McNeil

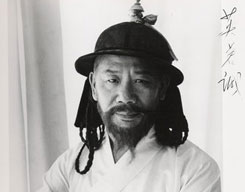

Some scientists may be forced to downplay limitations in their work or boost the significance of their findings to get in top-tier publications, says John Ioannidis.

You see it in the headlines: a new study in a scientific journal shows that a particular food has a surprising health effect—time to stock up. But are those kinds of studies representative of good research, or the result of a publication process that rewards novelty at the expense of careful science?

John Ioannidis, an adjunct professor at the School of Medicine, and two colleagues worry that there is indeed a tendency in biomedical science publications to favor more extreme results. Because only a small percentage of research submitted for publication makes it into print, especially in top-tier journals, researchers must hype their results to be included, Ioannidis argues.

In publications such as Nature and Science, “the more extreme, spectacular results (the largest treatment effects, the strongest associations, or the most novel and exciting biological stories) may be preferentially published,” write Ioannidis and co-authors Neal S. Young and Omar Al-Ubaydli in a recent issue of PLoS Medicine. And that’s not good, because those results often skew future research.

“If other scientists take the prestigious journals’ results for granted instead of trying to replicate or refute them objectively, they are forced to follow the same path to be fashionable, receive funding and get promoted,” Ioannidis says.

Ioannidis views scientific information as a commodity, and likens decisions about what to publish to oil firms bidding for drilling rights. Oil companies base their bids on estimates of the oil reserves. The average of all the firms’ estimates is usually close to the actual reserve, but the winning bid is by definition based on the most optimistic estimate, and as a result the winner sometimes loses money. It’s known in the trade as the “winner’s curse.”
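The arithmetic behind the winner's curse can be illustrated with a small simulation. This is only a sketch under invented assumptions, not anything taken from the PLoS Medicine paper: the reserve value, the number of bidders, and the normally distributed estimation error are all hypothetical numbers chosen for illustration.

```python
import random
import statistics


def simulate_winners_curse(true_value=100.0, noise_sd=20.0,
                           n_bidders=10, n_auctions=5000, seed=0):
    """Compare the average estimate with the most optimistic one.

    All parameters are hypothetical. Each bidder's estimate is the true
    value plus unbiased random error, so no single bidder is systematically
    wrong; only the act of picking the highest estimate introduces bias.
    """
    rng = random.Random(seed)
    average_estimates = []
    highest_estimates = []
    for _ in range(n_auctions):
        estimates = [rng.gauss(true_value, noise_sd) for _ in range(n_bidders)]
        average_estimates.append(statistics.fmean(estimates))
        highest_estimates.append(max(estimates))
    # Long-run averages of the two quantities across all simulated auctions
    return statistics.fmean(average_estimates), statistics.fmean(highest_estimates)


if __name__ == "__main__":
    mean_avg, mean_top = simulate_winners_curse()
    print(f"true value:               100.0")
    print(f"mean of average estimate: {mean_avg:.1f}")
    print(f"mean of highest estimate: {mean_top:.1f}")
```

Under these assumptions the average estimate stays close to the true value, while the highest estimate sits well above it. The same selection effect would apply if "bidders" were studies and the "highest estimate" were the most striking reported effect.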

Ioannidis believes that the choices made by journal editors amount to the same thing, choosing the “high bid”—in this case, the most spectacular findings—which might not be representative of the relevant scientific research. Ioannidis cites a 2005 study he did that found initial clinical studies were often unrepresentative and misleading. He also found that they are frequently the ones that get the most notice in the scientific literature.

“Exploratory analyses are regarded as highly appropriate, fascinating, or even a ‘must’ in many scientific fields, but the problem is when we don’t know exactly how much exploration and data dredging has preceded a specific research claim,” he says.

Ioannidis charges that some scientists “probably self-select their results for the branding conferred by publication in top journals. I don’t think they publish fake results, but they may be forced, for example, to downplay limitations in their work, or boost the significance of their findings in their presentation.”

The consequences could indeed be serious. “The scientific biomedical literature will form the basis of what is used in everyday medical practice,” he says. “Thus, the risk exists that patients and consumers of health care will be exposed to medical tests, interventions or other technology that are not as helpful as they seem and may even be harmful.”

Although the journals have editors who are professionals in their fields and include active peer-reviewers, “if the selection is based simply on what seems most exciting and likely to have people herding after it, rather than what is high quality and truly innovative, this could create a problematic situation,” Ioannidis says.

How can the situation be improved? Ioannidis suggests that journals should publish negative results more often, and publish positive findings only when the results are reproducible.

Quoting Karl Popper’s The Logic of Scientific Discovery, he and his colleagues write, “If ‘the advance of science depends upon the free competition of thought,’ we must ask whether we have created a system for the exchange of scientific ideas that will serve this end.”

Taylor McNeil can be reached at taylor.mcneil@tufts.edu.